It is an experience to read and brood over the writings of Isaac Asimov.

If you are just starting, I envy you – you have a wonderful journey ahead of you !!

But don’t just start a series randomly, the journey has a very disciplined roadmap, so that the mysteries of the world of robots will be systematically revealed to you.

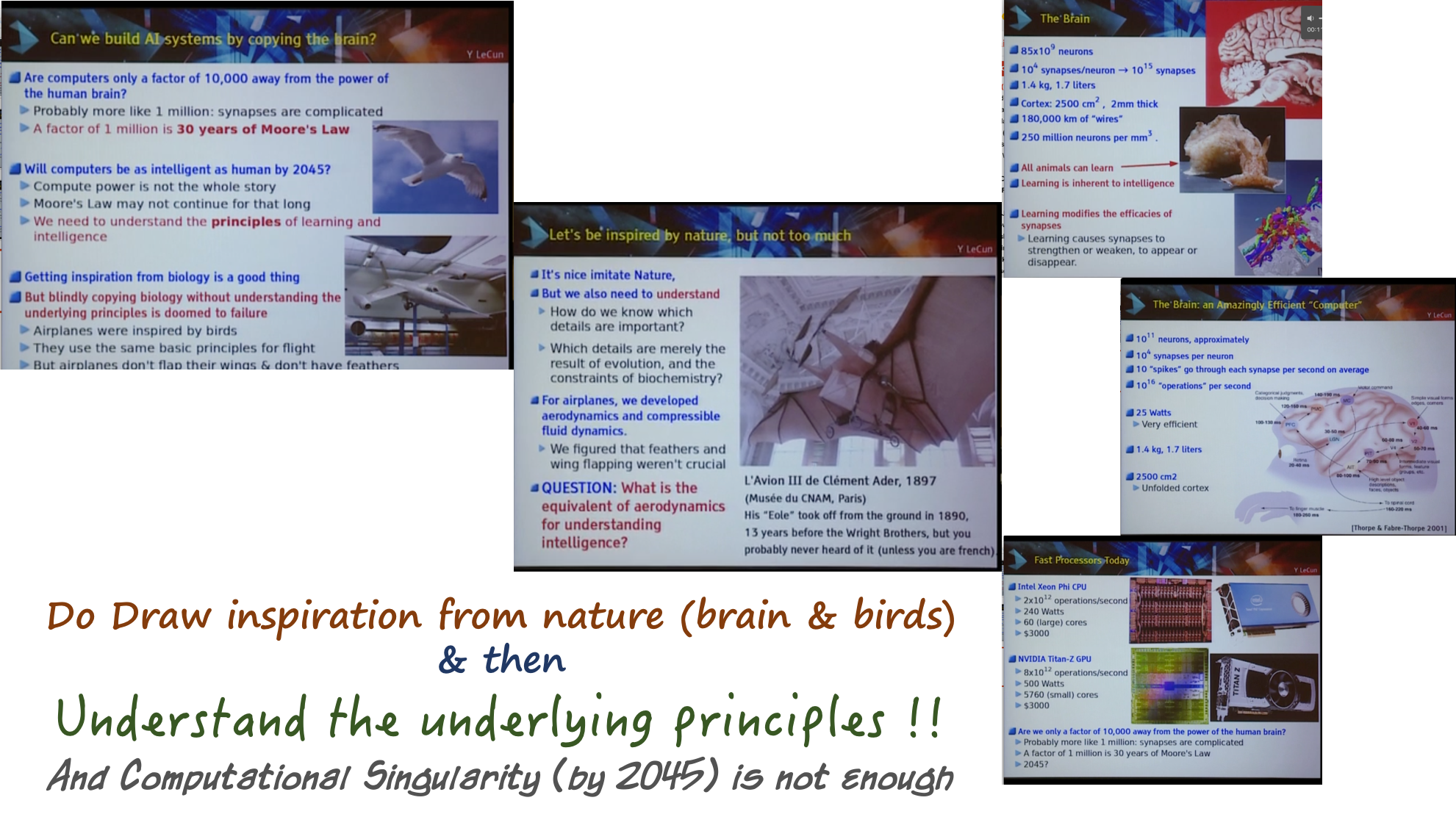

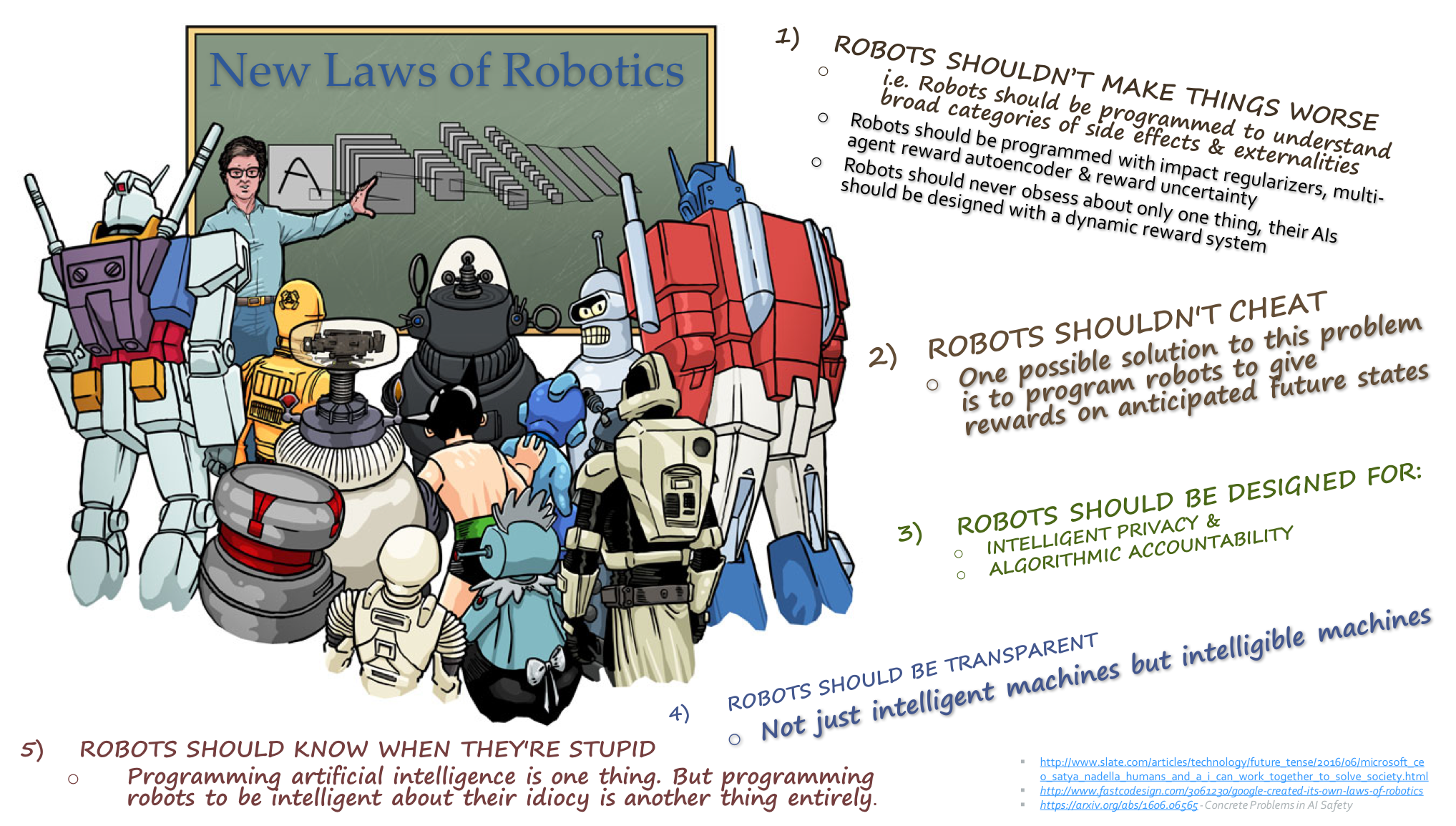

Asimov is a required reading for anyone working on Autonomous Cars, Artificial Intelligence and to a lesser extent, Machine Learning.

Detour : Other must read AI books include “The Master Algorithm“, “Final Jeopardy” – among a lot of good books …

The books are more relevant now than then – you see, then it was science fiction, now the concepts are turning into reality !!!

As the Reddit Series Guide mentions, you can follow the publishing order or the internal story chronological order. But both are non-optimal and I think the orders would interfere with the reader’s thinking.

Isaac Asimov, himself, has suggested an order, which is more closer to my thinking but still not quite …

[Note : I pieced together the list from various discussions in Reddit and will note original comments within quotes]

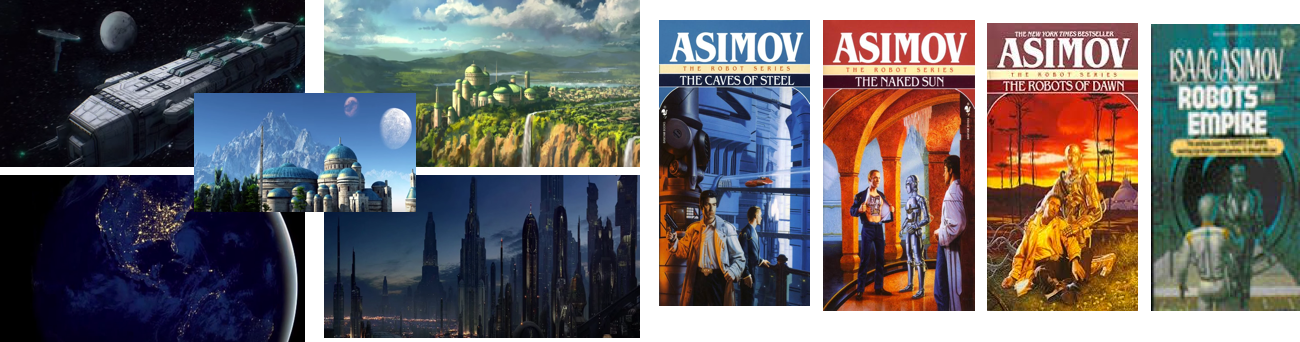

First things first – read the Robot Series, in chronological/publication order. You have to meet Elijah Baley and R. Daneel Olivaw !

A) The Caves of Steel

B) The Naked Sun

C) The Robots of Dawn

D) Robots and Empire

Then comes the Foundation Series.

“The two common recommendations are to read these either publication order or chronological order.

I have a third recommendation: start with the original trilogy, then read the prequels, and end with Edge and Earth. …

This gives a good arrangement stylistically, with the earlier novels followed by the later ones. Asimov’s writing style changes distinctly over time. It also gives a good arrangement chronologically, with the prequels foreshadowing the final two books, instead of explaining things you’ve already read about.

And best of all, you end with the cliffhanger, instead of reading it and then reading 2-5 more books that don’t resolve it.”

The following order “preserves the mystery the first-time reader would have going into the first Foundation book. Part of the enjoyment of the Foundation novel is that you don’t know who Seldon is, in those opening scenes on Trantor, or what role he’s going to play in the story. If you read Prelude and Forward first, you’ll already have an earful about Trantor and Seldon before you get to Seldon’s introduction through Gaal Dornick’s eyes in Foundation”

E) Foundation

F) Foundation and Empire

G) Second Foundation

H) Foundation’s Edge

I) Foundation and Earth

J) And finally read Stephen Collins’ Conclusion The Foundation’s Resolve. I found it a satisfying end to a great saga

(Optional – Rest of the Foundation Books)

i) Prelude to Foundation

ii) Foundation’s Fear (if you really must)

iii) Forward the Foundation

iv) Foundation and Chaos

v) Foundation’s Triumph

Now you are read for the rest.

K) “Complete Robots”

The books are packaged with overlapping content. Questions like “The Complete Robots” vs “”Robot Dreams” & “Robot Visions” vs “I, Robot” comes up all the time. This Reddit discussion addresses this dilemma.

Then you can diverge to other books like Nemesis and The End Of Eternity. The [Galactic] Empire Series are not essential, but do read them – “The Currents of Space”, “The Stars, Like Dust” and “Pebble in the Sky”. Publishing order is fine.

You should also explore Isaac Asimov’s Home Page.

Now you are part of the Asimov club – And have one interesting task to do – which is feedback ! Add comments to this blog with insights – you could even add a new roadmap guid of your own with a very different POV !!!

What says thee ?

What says thee ?