The background of this blog is the GPU Technology Conference (GTC) ’16 Amsterdam keynote by NVIDIA CEO Jen-Hsun Huang. He is extremely eloquent, very knowledgeable, articulate and passionate: all great ingredients for a memorable keynote, and you won’t be disappointed. He keeps the energy up for 2 hours with no curtains and no diversions, a feat on its own! (P.S.: I have heard from other folks that JH’s whiteboard talks are exponentially more informative and eloquent.)

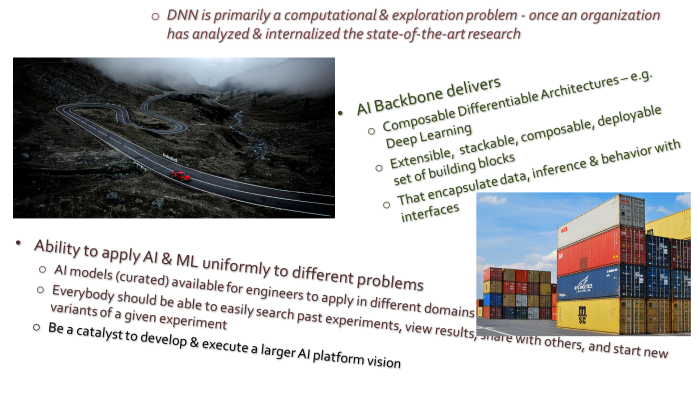

Popping the stack back to the main feature: the goal of this blog is to address a very thin slice of the talk, the AI backbone for a scalable infrastructure beyond lab experiments. I have talked with many folks, and the topic of a scalable AI pipeline/infrastructure tends to get lost in the hyperbole surrounding Artificial Intelligence and the rest …

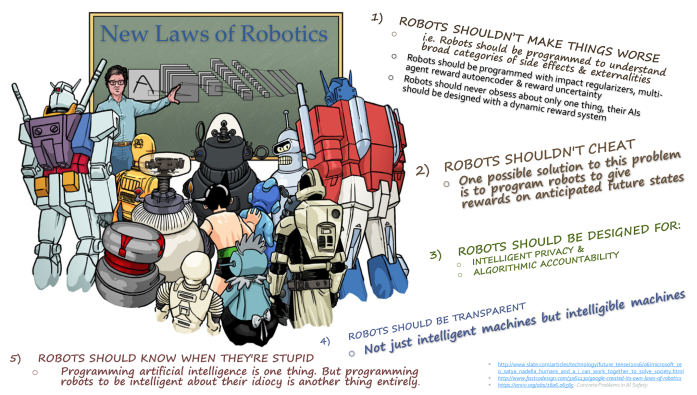

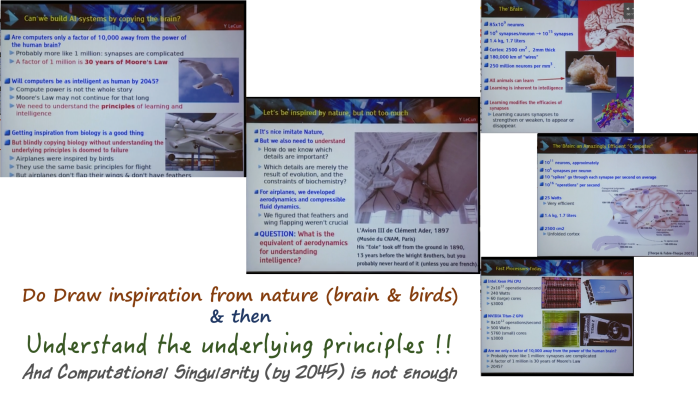

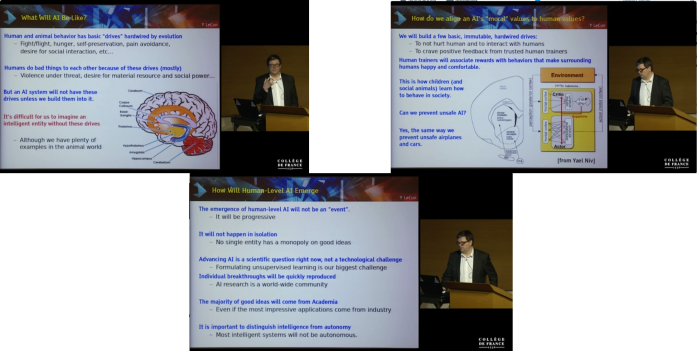

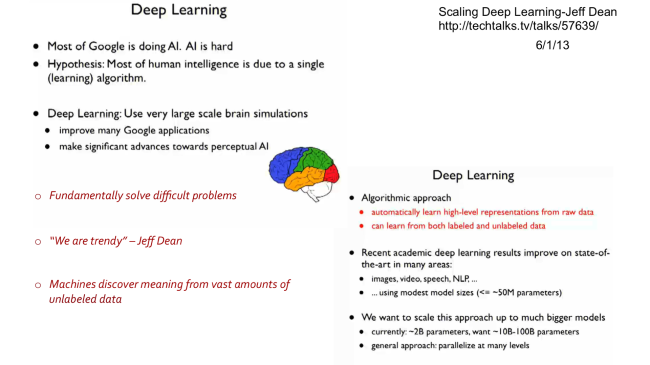

First let us take a quick detour and put down some thoughts on an AI Backbone in four visualizations, nothing less-nothing more ….

With this background, let us take a look at the relevant slides from Jen-Hsun Huang’s eloquent GTC’16 keynote in Amsterdam.

I. Computing is evolving from a CPU model to a GPU model

- Since 2012, GPU-based deep learning systems have matched or surpassed human-level performance on tasks like image recognition, and have made big strides in others such as translation

There is a new computing model, the GPU computing pipeline, with four components:

- Training,

- The network master models, a.k.a. the AI backbone,

- Inferencing in the data center (AI applications) and

- Inferencing in IoT/devices (e.g., autonomous cars)

II. Training

- This is where new architectures are tested with large amounts of data, where transfer learning with pre-trained models happens, and so forth.

- I like NVIDIA’s DGX-1 or the AWS GPU clouds. You can also build a local cluster with NVIDIA GPUs such as the Titan X (like mine, below).

III. The Master Models

- Reference models, curated data and other stuff live here. Engineers can interact with the models, morph them to new domains (using transfer learning, for example) and so forth. They train using the DGX-1s or GPU clouds.
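To make the "master models" idea a little more concrete, here is a minimal Python sketch of a model registry that records which reference model each domain-specific model was derived from. Everything in it (the `MasterModelRegistry` class, its methods, the model names and domains) is hypothetical and only illustrates the bookkeeping; the actual transfer-learning training would run on the DGX-1s or GPU clouds and is not shown.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    domain: str
    parent: Optional[str] = None  # "name:version" of the reference model it came from


@dataclass
class MasterModelRegistry:
    """Hypothetical store for reference ("master") models and their lineage."""
    models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, domain: str, parent: Optional[str] = None) -> ModelVersion:
        # Each registration under a name gets the next version number (1, 2, ...).
        versions = self.models.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, domain, parent)
        versions.append(mv)
        return mv

    def latest(self, name: str) -> ModelVersion:
        return self.models[name][-1]

    def derive(self, base_name: str, new_name: str, new_domain: str) -> ModelVersion:
        # Stands in for transfer learning: only the lineage is recorded here;
        # the retraining itself would happen on the training cluster.
        base = self.latest(base_name)
        return self.register(new_name, new_domain, parent=f"{base.name}:{base.version}")

    def lineage(self, name: str) -> List[str]:
        # Walk parent links from the latest version back to the original reference model.
        chain = []
        current = self.latest(name)
        while True:
            chain.append(f"{current.name}:{current.version}")
            if current.parent is None:
                break
            pname, pver = current.parent.rsplit(":", 1)
            current = self.models[pname][int(pver) - 1]
        return chain


# Usage: a generic image model is morphed into an automotive one.
registry = MasterModelRegistry()
registry.register("imagenet-ref", "generic-images")
registry.derive("imagenet-ref", "road-signs", "automotive")
print(registry.lineage("road-signs"))  # ['road-signs:1', 'imagenet-ref:1']
```

Keeping lineage explicit is one way an AI backbone can answer "which reference model and data did this deployed model come from?" when many teams derive models from the same masters.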

IV. Scoring the models in various contexts

- Now we reap the benefits of our hard work: the applications! They come in two flavors: those that run in the datacenter and those that run on devices (cars, drones, phones et al.).

- Datacenter inferencing is relatively straightforward: host the models in a cluster or in the cloud. The hosting infrastructure can use GPUs as well.

- Device inferencing is a little trickier: in my world, it is the Drive PX-2 for autonomous cars (you can see it in the picture of my desk).

The new Xavier architecture is interesting: tastes better, fewer calories (er … less power).

P.S.: BTW, I like the view from the camera in the top right corner of the podium! The slides have an elegant 3-D look!

V. Epilogue

Interesting domain, with a future.

“… something really big is around the corner … a brand new revolution, what people call the AI revolution, the beginning of the fourth industrial revolution …”

As you can see, I really liked the keynote. Digressing, another informative and energetic presenter is Mobileye’s CTO/Chairman, Prof. Amnon Shashua; I usually take time to listen to his talks and take notes.